From Analog to Digital: A Journey Through the Evolution of Computers – Exploring Milestones and Innovations

Introduction:

The evolution of computers is a fascinating journey marked by innovation, ingenuity, and technological advancements. From the earliest mechanical devices to the powerful digital systems of today, computers have revolutionized the way we live, work, and communicate. Join us as we explore the milestones and innovations that have shaped this remarkable journey from analog to digital.

The Birth of Computing: Mechanical Calculators

The journey begins in the 17th century with the invention of mechanical calculators like Blaise Pascal’s Pascaline and Gottfried Wilhelm Leibniz’s stepped reckoner. These early devices paved the way for automated computation and laid the foundation for future developments in computing.

The Advent of Programmable Computers: ENIAC

The 20th century witnessed a monumental leap in computing with the development of ENIAC (Electronic Numerical Integrator and Computer), widely regarded as the first programmable, general-purpose electronic digital computer. Designed and built during World War II and completed in 1945, ENIAC relied on vacuum tubes rather than moving mechanical parts, which let it perform complex calculations far faster than the electromechanical machines of its day.

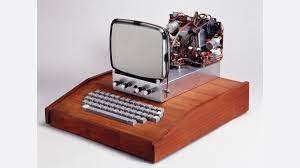

The Birth of the Personal Computer: Altair 8800

The 1970s saw the emergence of the personal computer revolution with the introduction of the Altair 8800 by Micro Instrumentation and Telemetry Systems (MITS) in 1975. Built around an Intel 8080 microprocessor, the Altair 8800 inspired hobbyists and entrepreneurs to explore the possibilities of home computing.

The Rise of Graphical User Interfaces: Xerox Alto

In 1973, Xerox’s Palo Alto Research Center (PARC) built the Xerox Alto, a revolutionary computer featuring a graphical user interface (GUI) and a mouse. Although it was never sold as a commercial product, the Alto laid the groundwork for the GUI-based systems that followed in the 1980s, such as Apple’s Macintosh and Microsoft’s Windows.

The Internet Age: World Wide Web

The advent of the World Wide Web in the early 1990s transformed computing and communication on a global scale. Tim Berners-Lee’s creation of the first web browser and web server laid the foundation for the interconnected digital world we inhabit today, enabling seamless information sharing and collaboration across the globe.

The Era of Mobile Computing: Smartphones and Tablets

The 21st century witnessed the proliferation of mobile computing devices like smartphones and tablets, revolutionizing the way we access information and interact with technology. With powerful processors, high-resolution displays, and advanced connectivity features, smartphones and tablets have become indispensable tools for work, entertainment, and communication.

FAQs (Frequently Asked Questions)

What is the difference between analog and digital computers?

Analog computers represent data as continuously varying physical quantities such as voltage or current, while digital computers manipulate discrete data represented in binary form (0s and 1s). Because discrete values can be stored and copied without accumulating noise, digital computers are more versatile, more precise, and far more widely used than analog computers.
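To make the continuous-versus-discrete distinction concrete, here is a minimal Python sketch of how a continuous value can be turned into a binary code. The function names (`quantize`, `to_binary`, `reconstruct`), the 3-bit resolution, and the -1.0 to 1.0 range are illustrative assumptions, not a description of any particular machine.

```python
import math

def quantize(value, bits=3, v_min=-1.0, v_max=1.0):
    """Map a continuous value to the nearest of 2**bits discrete levels."""
    levels = 2 ** bits
    # Clamp so that out-of-range inputs still map to a valid level.
    clamped = max(v_min, min(v_max, value))
    step = (v_max - v_min) / (levels - 1)
    return round((clamped - v_min) / step)

def to_binary(level, bits=3):
    """Write a level number as a fixed-width string of 0s and 1s."""
    return format(level, f"0{bits}b")

def reconstruct(level, bits=3, v_min=-1.0, v_max=1.0):
    """Convert a discrete level back to an approximate continuous value."""
    step = (v_max - v_min) / (2 ** bits - 1)
    return v_min + level * step

if __name__ == "__main__":
    # Sample one cycle of a sine wave (a stand-in for an analog voltage).
    for i in range(8):
        analog = math.sin(2 * math.pi * i / 8)
        level = quantize(analog)
        print(f"{analog:+.3f} -> {to_binary(level)} -> {reconstruct(level):+.3f}")
```

The reconstructed value is only an approximation of the original: with 3 bits there are just eight possible levels. Adding more bits narrows the gap, which is the basic trade-off behind digitizing any analog signal.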

Who is considered the father of modern computing?

Alan Turing is often referred to as the father of modern computing for his pioneering work in theoretical computer science and artificial intelligence. Turing’s 1936 description of the Turing machine, an abstract model of computation, laid the theoretical foundation for modern computer science and programming.

What role did the transistor play in the evolution of computers?

The invention of the transistor in 1947 by William Shockley, John Bardeen, and Walter Brattain revolutionized computing by replacing bulky vacuum tubes with smaller, more reliable electronic components. Transistors enabled the development of smaller, faster, and more energy-efficient computers, paving the way for the digital revolution.

How has cloud computing impacted the evolution of computers?

Cloud computing has transformed the way we store, access, and process data by providing on-demand access to computing resources over the internet. With cloud computing, users can leverage scalable infrastructure and services without the need for costly hardware investments, driving innovation and collaboration in the digital era.

What is quantum computing, and how does it differ from classical computing?

Quantum computing harnesses the principles of quantum mechanics to solve certain classes of problems much faster than classical computers can. Unlike classical computers, which process data using binary bits (0s and 1s), quantum computers use quantum bits, or qubits, which can exist in a superposition of both states at once. For some tasks, such as factoring large numbers, known quantum algorithms promise dramatic speedups, although building large, reliable quantum computers remains an open engineering challenge.
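The idea of a qubit holding more than one state can be illustrated with a small classical simulation. The Python sketch below is a simplified toy model, not how a real quantum computer is programmed: it stores a single qubit as a pair of amplitudes and applies the Hadamard gate, a standard operation that turns a definite 0 into an equal mix of 0 and 1. The helper names are assumptions chosen for this example.

```python
import math

def hadamard(state):
    """Apply the Hadamard gate to a single-qubit state (amp0, amp1)."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))

def probabilities(state):
    """Chance of measuring 0 or 1: the squared magnitude of each amplitude."""
    a, b = state
    return (abs(a) ** 2, abs(b) ** 2)

def amplitudes_needed(n_qubits):
    """A classical simulation must track 2**n amplitudes for n qubits."""
    return 2 ** n_qubits

zero = (1.0, 0.0)        # a qubit that is definitely 0
plus = hadamard(zero)    # an equal superposition of 0 and 1
p0, p1 = probabilities(plus)

if __name__ == "__main__":
    print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")
    print("Amplitudes to simulate 30 qubits:", amplitudes_needed(30))
```

The last function hints at why quantum hardware is interesting: describing n qubits classically takes 2**n numbers, so simulating even a few dozen qubits quickly becomes impractical on conventional machines.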

What is the future of computing?

The future of computing holds exciting possibilities, including advancements in artificial intelligence, quantum computing, edge computing, and biocomputing. These technologies have the potential to revolutionize industries, accelerate scientific discovery, and reshape the way we live and work in the digital age.

Conclusion:

The journey from analog to digital computing is a testament to human ingenuity, innovation, and perseverance. As we continue to push the boundaries of technology and explore new frontiers in computing, we embark on an exciting journey of discovery and transformation, shaping the future of our digital world.